OpenShift 4 on OpenStack Networking and Installation

OpenShift Container Platform 4 is much more like Tectonic than OpenShift 3, particularly when it comes to installation and node management. Rather than building machines and running an Ansible playbook to configure them, you now have the option of setting a few parameters in an install config and running an installer that builds and configures the cluster from scratch.

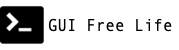

I would like to illustrate how the basic networking might look when installing OpenShift on OpenStack. I also wanted an excuse to try out a new iPad sketch app. These notes are based on recent 4.4 nightly builds running on OSP 13 (Queens).